ATT (Audio Transcription Toolkit)

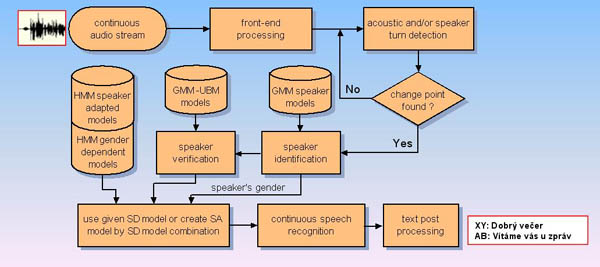

ATT has a rather complex architecture composed of several modules. Here we describe only the functions that are relevant from the user’s point of view.

The system can process acoustic data either from previously recorded files or directly from online sources, such as a microphone, a TV/radio card, or an Internet broadcast stream. The audio is sampled at 16 kHz with 16-bit resolution and stored uncompressed to ensure the best performance of the speech-to-text algorithms. For TV shows, the video part is not stored; instead, a link to the web source is registered.

The first module converts the audio signal into a stream of feature vectors. These are 39-dimensional mel-frequency cepstral coefficient (MFCC) vectors computed every 10 ms over 25-ms frames; each vector contains 13 static, 13 delta, and 13 delta-delta coefficients. Cepstral mean subtraction (CMS) is applied within a 4-second sliding window. The same MFCC features are used by all subsequent modules, i.e. for speaker change detection, speaker identification, and speech recognition.

The second module searches for significant changes in the audio characteristics. This information is used primarily to separate the speech and non-speech parts of the signal.
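The feature pipeline above can be sketched as follows. This is a minimal, hedged illustration, not ATT’s implementation. It assumes the 13 static MFCCs per frame are already computed (the mel filterbank and DCT are omitted), uses the common regression formula with a ±2-frame window for the deltas, applies CMS to the static coefficients over a window centred on each frame, and repeats the edge frames. All of these are assumptions; the text only fixes the 16 kHz rate, the 25 ms / 10 ms framing, the 13 + 13 + 13 layout, and the 4-second CMS window.

```python
from typing import List

# Assumption: static MFCCs are given; only framing arithmetic, CMS and deltas are shown.
Frame = List[float]

SAMPLE_RATE = 16_000                  # Hz, as stated in the text
FRAME_LEN = SAMPLE_RATE * 25 // 1000  # 25 ms frame -> 400 samples
HOP_LEN = SAMPLE_RATE * 10 // 1000    # 10 ms shift -> 160 samples
CMS_WINDOW = 4000 // 10               # 4 s window / 10 ms shift -> 400 frames


def num_frames(num_samples: int) -> int:
    """Number of full 25-ms frames taken every 10 ms (no padding)."""
    if num_samples < FRAME_LEN:
        return 0
    return 1 + (num_samples - FRAME_LEN) // HOP_LEN


def sliding_cms(frames: List[Frame], win_frames: int = CMS_WINDOW) -> List[Frame]:
    """Subtract the mean of a window centred on each frame (clipped at the edges)."""
    dim = len(frames[0])
    prefix = [[0.0] * dim]  # prefix[t][d] = sum of frames[0..t-1][d]
    for f in frames:
        prefix.append([p + x for p, x in zip(prefix[-1], f)])
    half = win_frames // 2
    out = []
    for t in range(len(frames)):
        lo, hi = max(0, t - half), min(len(frames), t + half + 1)
        count = hi - lo
        mean = [(prefix[hi][d] - prefix[lo][d]) / count for d in range(dim)]
        out.append([x - m for x, m in zip(frames[t], mean)])
    return out


def deltas(frames: List[Frame], n_win: int = 2) -> List[Frame]:
    """Regression deltas: sum_n n*(c[t+n] - c[t-n]) / (2 * sum_n n^2), edges repeated."""
    last = len(frames) - 1
    denom = 2 * sum(n * n for n in range(1, n_win + 1))
    out = []
    for t in range(len(frames)):
        row = []
        for d in range(len(frames[t])):
            acc = sum(n * (frames[min(t + n, last)][d] - frames[max(t - n, 0)][d])
                      for n in range(1, n_win + 1))
            row.append(acc / denom)
        out.append(row)
    return out


def feature_vectors(static: List[Frame]) -> List[Frame]:
    """Build 39-dim vectors: 13 static + 13 delta + 13 delta-delta."""
    normed = sliding_cms(static)
    d1 = deltas(normed)
    d2 = deltas(d1)
    return [s + a + b for s, a, b in zip(normed, d1, d2)]
```

For one second of audio, `num_frames(16_000)` gives 98 frames, and each entry returned by `feature_vectors` has 39 values. Because a constant offset cancels in both the CMS and the delta differences, a channel that only adds a fixed cepstral bias leaves the normalised features unchanged.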

Non-speech parts are simply labeled as “silence”, “music”, “noise”, or “other” and skipped without further processing. Speech parts are split into segments, each spoken by a single speaker. The next module takes these segments and tries to determine the speaker’s identity. The current version uses a database of 300 speakers and their voice characteristics. The database consists of people who often appear in broadcast media, namely TV and radio presenters, politicians, members of the government, and members of parliament (MPs). The module identifies these people with an accuracy of about 88 %. If the speaker is not in the database, or the system is not confident about his or her identity, the module still provides the speaker’s gender.
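The decision described above (accept an identity only when the system is confident, otherwise report just the gender) could look like the hypothetical sketch below. The text does not say how confidence is measured, so the sketch assumes that each enrolled speaker model and a male and a female model each produce a log-likelihood score for the segment, and that the best speaker is accepted only if it beats both the runner-up and the better gender model by `min_margin` (an arbitrary value here). The helper `acoustic_model_for` and its model names are also hypothetical; they mirror the acoustic-model choice the recognizer makes from this decision.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical scoring/threshold scheme; not ATT's documented method.


@dataclass
class SpeakerDecision:
    speaker: Optional[str]  # None when no identity is accepted
    gender: str             # "male" or "female"


def identify(speaker_scores: Dict[str, float],
             speaker_gender: Dict[str, str],
             male_score: float,
             female_score: float,
             min_margin: float = 1.0) -> SpeakerDecision:
    """Pick the best-scoring enrolled speaker, or fall back to gender only."""
    fallback_gender = "male" if male_score >= female_score else "female"
    if not speaker_scores:
        return SpeakerDecision(None, fallback_gender)

    ranked = sorted(speaker_scores.items(), key=lambda kv: kv[1], reverse=True)
    best_name, best_score = ranked[0]
    # Competitors: the better gender model and, if present, the runner-up speaker.
    competitors = [max(male_score, female_score)]
    if len(ranked) > 1:
        competitors.append(ranked[1][1])
    if best_score - max(competitors) >= min_margin:
        return SpeakerDecision(best_name, speaker_gender[best_name])
    return SpeakerDecision(None, fallback_gender)


def acoustic_model_for(decision: SpeakerDecision) -> str:
    """Hypothetical model names: speaker-adapted if known, else gender-dependent."""
    if decision.speaker is not None:
        return f"adapted/{decision.speaker}"
    return f"general/{decision.gender}"
```

For example, with scores of -40 and -45 for two enrolled speakers and gender-model scores of -50 (male) and -48 (female), speaker "A" is accepted; if the two best speakers differ by only 0.5, only the gender is reported and a general model is chosen.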

The main module performs speech recognition. To make the transcription as accurate as possible, the recognizer uses the information about the speaker’s identity: for each known speaker, his or her adapted acoustic model is used; for all other speakers, a general male or female model is applied. The recognizer employs a large lexicon containing the most frequent Czech words and word forms, together with a statistical language model that represents the relations between N adjacent words. The current version contains about 500,000 words, and the language model consists mainly of bigrams (N = 2). However, because the most frequent multi-word strings (e.g. “New York”, “Czech Republic”, “it is”, or “in Prague”) were also added to the vocabulary, the language model in fact covers many combinations of 3 or 4 adjacent words. The output of the module is the word string that best matches the given signal segment.

Our large-scale study has shown that transcription accuracy is about 95 % for professional speakers in a studio, about 85 to 90 % for other people speaking in a quiet environment, and below 80 % for noisy and spontaneous speech recorded, for example, in the street. Although the accuracy varies considerably, it is still acceptable when the target application is search rather than exact transcription.

The next module in the system hierarchy performs text post-processing: converting spelled-out numbers into the corresponding digit strings, capitalizing proper names, formatting the text, etc.

The last module stores all transcriptions in a form that allows later searching. Each program, speaker segment, utterance, and word gets a time stamp, i.e. a pair of values (start time and end time) measured in milliseconds from the beginning of the recording. Each segment also carries information about the identified speaker and his or her gender. For each recognized word, we also store its pronunciation form, which is useful when a word can occur in several pronunciation variants.
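A minimal sketch of how such time-stamped records could be organised and searched is shown below. The class and function names are hypothetical, the utterance level is omitted for brevity, and the simple inverted index (lower-cased word to list of hits) is an assumption; the text does not describe ATT’s actual storage format. A multi-word lexicon item such as “New York” is stored as a single word entry, so it is found with one lookup.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical record layout; ATT's real storage format is not described.


@dataclass
class Word:
    text: str            # recognized lexicon item (may span several words)
    pronunciation: str   # pronunciation variant chosen by the recognizer
    start_ms: int        # time stamps relative to the start of the recording
    end_ms: int


@dataclass
class Segment:
    speaker: Optional[str]  # None if the speaker was not identified
    gender: str
    start_ms: int
    end_ms: int
    words: List[Word] = field(default_factory=list)


@dataclass
class Program:
    title: str
    source_url: Optional[str]  # e.g. link to the web source of a TV show
    segments: List[Segment] = field(default_factory=list)


# A hit: (program title, word start in ms, word end in ms, speaker or None)
Hit = Tuple[str, int, int, Optional[str]]


def build_index(programs: List[Program]) -> Dict[str, List[Hit]]:
    """Map each lower-cased word to every place where it was recognized."""
    index: Dict[str, List[Hit]] = defaultdict(list)
    for prog in programs:
        for seg in prog.segments:
            for w in seg.words:
                index[w.text.lower()].append(
                    (prog.title, w.start_ms, w.end_ms, seg.speaker))
    return index


def search(index: Dict[str, List[Hit]], query: str) -> List[Hit]:
    """Return all hits for a query word (case-insensitive); [] if none."""
    return index.get(query.lower(), [])
```

Because every hit carries millisecond time stamps, a search front end could jump straight to the matching position in the stored audio or in the linked web source.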

- Nouza, J., Zdansky, J., Cerva, P.: System for automatic collection, annotation and indexing of Czech broadcast speech with full-text search. In: Proc. of the 15th IEEE Mediterranean Electrotechnical Conference (MELECON 2010), Malta, 25-28 April 2010, pp. 202-205. ISBN: 978-1-4244-5793-9