Real-time transcription of radio streams in various languages

During the last two decades, we have been developing automatic speech recognition (ASR) systems for various applications and languages.

These systems use state-of-the-art technologies, mainly deep neural networks, at several levels, including speech/non-speech detection, speaker change-point detection, and acoustic modelling.

On the next page, you can listen to the selected radio stations and watch the corresponding automatic transcriptions.

Some hints before you proceed:

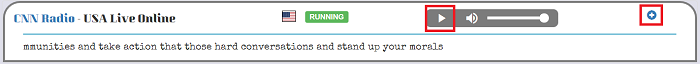

- To listen to a radio station, click its Play button.

- You can see a more detailed view of the transcripts by pressing the corresponding + button.

- For the text and audio to stay in sync, make sure your computer's clock is synchronized with world time.

- In the latest version of Chrome, you may not hear sound for some stations. In Firefox, all stations should work.

Click here to start.

This application was created within two large research projects, "MultiLinMedia" and "DeepSpot", supported by the Czech Technology Agency.

Related publications:

- Mateju, L., Cerva, P., Zdansky, J.: An Approach to Online Speaker Change Point Detection Using DNNs and WFSTs, INTERSPEECH, pp. 649-653, 2019.

- Malek, J., Zdansky, J., Cerva, P.: Robust Recognition of Speech with Background Music in Acoustically Under-Resourced Scenarios, IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5624-5628, 2018.

- Mateju, L., Cerva, P., Zdansky, J., Safarik, R.: Using Deep Neural Networks for Identification of Slavic Languages from Acoustic Signal, INTERSPEECH, pp. 1803-1807, 2018.

- Malek, J., Zdansky, J., Cerva, P.: Robust Automatic Recognition of Speech with Background Music, IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5210-5214, 2017.

- Mateju, L., Cerva, P., Zdansky, J., Malek, J.: Speech Activity Detection in Online Broadcast Transcription Using Deep Neural Networks and Weighted Finite State Transducers, IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5460-5464, 2017.

- Nouza, J., Safarik, R., Cerva, P.: ASR for South Slavic Languages Developed in Almost Automated Way, INTERSPEECH, pp. 3868-3872, 2016.

- Seps, L., Malek, J., Cerva, P., Nouza, J.: Investigation of deep neural networks for robust recognition of nonlinearly distorted speech, INTERSPEECH, pp. 363-367, 2014.

- Cerva, P., Silovsky, J., Zdansky, J., Nouza, J., Seps, L.: Speaker-adaptive speech recognition using speaker diarization for improved transcription of large spoken archives, Speech Communication, 55, pp. 1033-1046, 2013.